
Mingda WAN

Short Bio

I am very fortunate to be advised by Prof. Tao LIN at LINs Lab. Previously, I worked with Prof. Yingyu Liang and Dr. Zhenmei Shi at UW–Madison. My research interests lie mainly at the intersection of generative modeling and theoretical machine learning.


Selected Publications

* denotes alphabetical author order.


Unraveling the Smoothness Properties of Diffusion Models: A Gaussian Mixture Perspective

Yingyu Liang*, Zhizhou Sha*, Zhenmei Shi*, Zhao Song*, Mingda Wan*, and Yufa Zhou*

ICCV 2025 paper


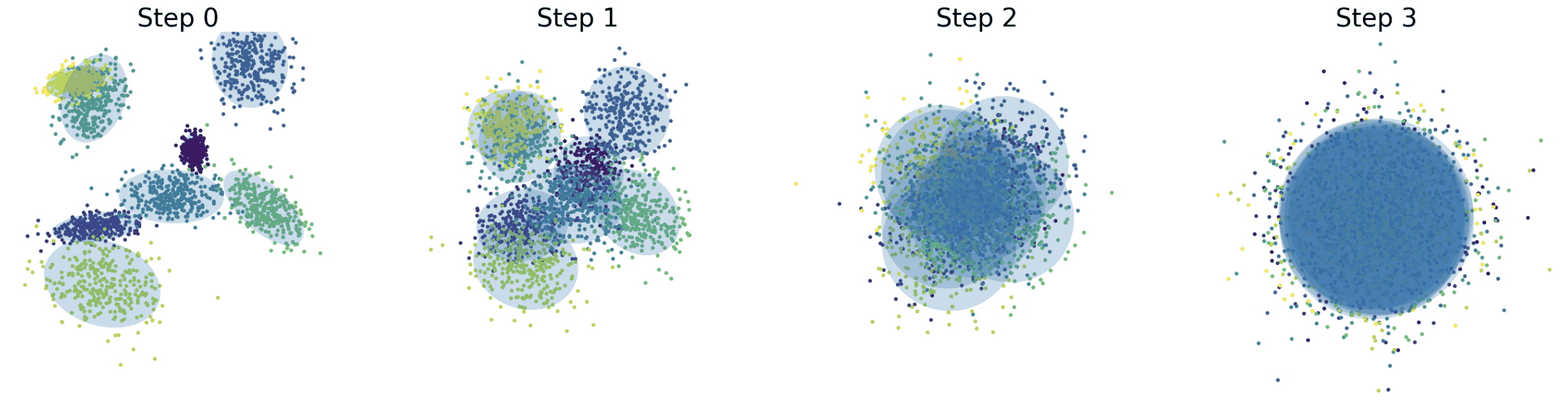

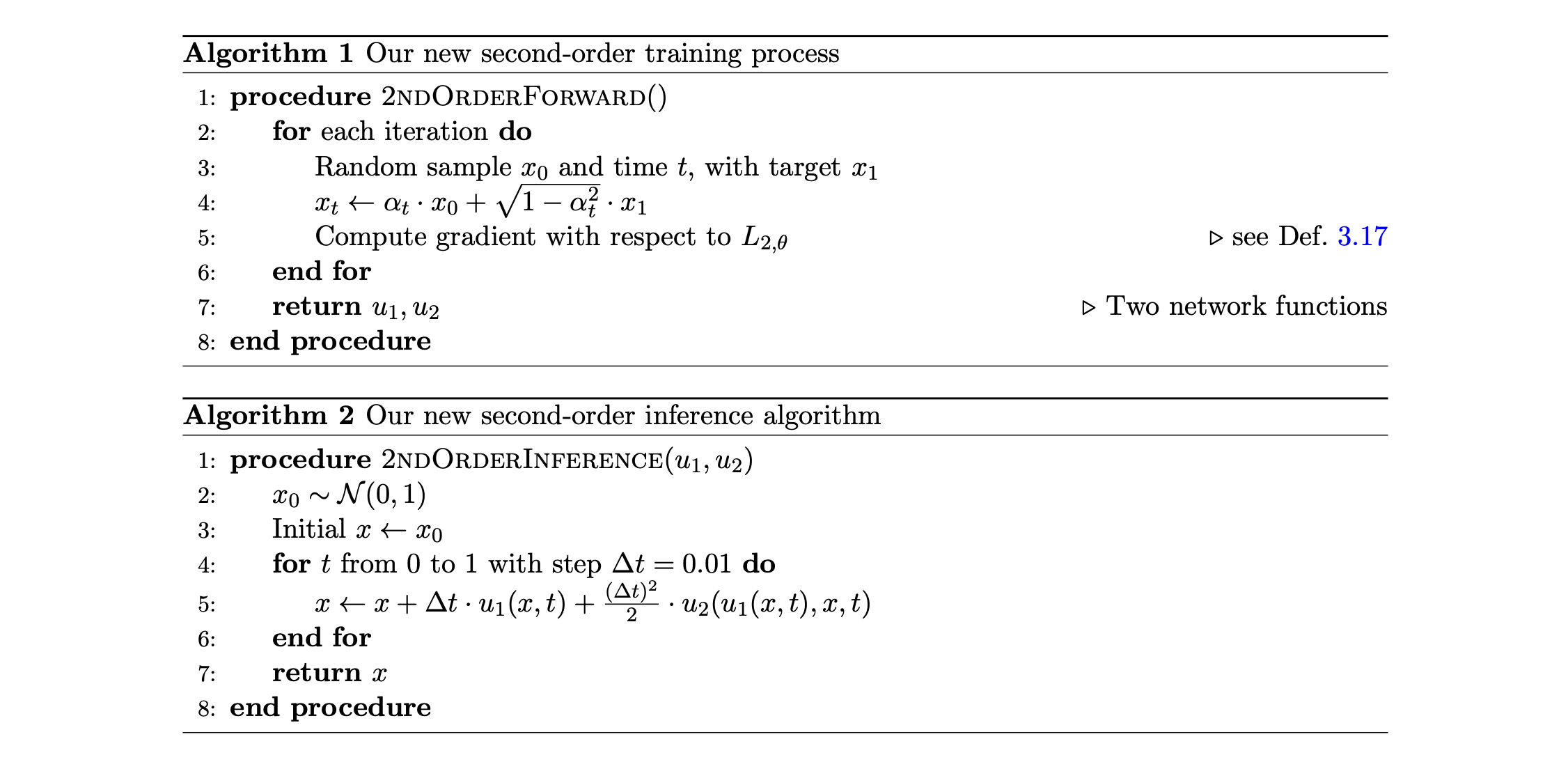

NRFlow: Towards Noise-Robust Generative Modeling via High-Order Flow Matching

Bo Chen*, Chengyue Gong*, Xiaoyu Li*, Yingyu Liang*, Zhizhou Sha*, Zhenmei Shi*, Zhao Song*, Mingda Wan*, and Xugang Ye*

UAI 2025 paper


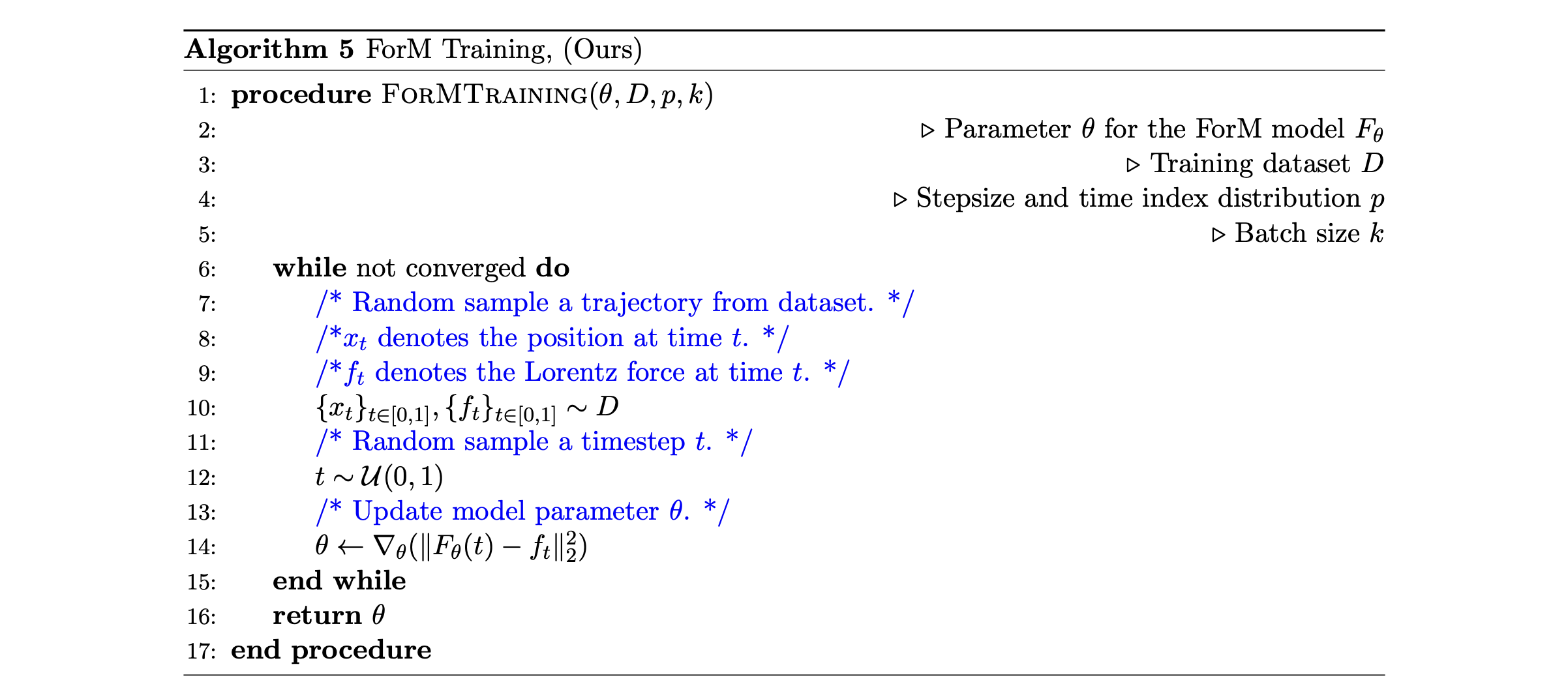

Force Matching with Relativistic Constraints: A Physics-Inspired Approach to Stable and Efficient Generative Modeling

Yang Cao*, Bo Chen*, Xiaoyu Li*, Yingyu Liang*, Zhizhou Sha*, Zhenmei Shi*, Zhao Song*, and Mingda Wan*

CIKM 2025 paper

Academic Service


External Reviewer

Research Grants Council (Hong Kong)


Reviewer

ICCV 2025, KDD 2024

Hobbies

In my spare time, you can often find me jogging, swimming, or working out. I am also obsessed with classical novels, cinema, and progressive rock.


Design and source code from Jon Barron.